There is a lot of history surrounding the encoding and decoding of data. Fundamentally, this concept revolves around how to store and render letter characters. As you know, all things that are computed must be constructed from either a 0 or a 1—a bit. Then you build sequences of those two numbers into, most commonly, 8‐bit bytes, which can then be used to represent a character. You also likely know that the maximum possible unique bytes you can have using 8‐bits is 256 (28), a number you will see in a lot of places. Early on in the era of computing, the mapping to characters was focused on English characters. Using ASCII or HEX, a character string like brainjammer can be converted into a set of numeric values. Then those values can be further converted into binary.

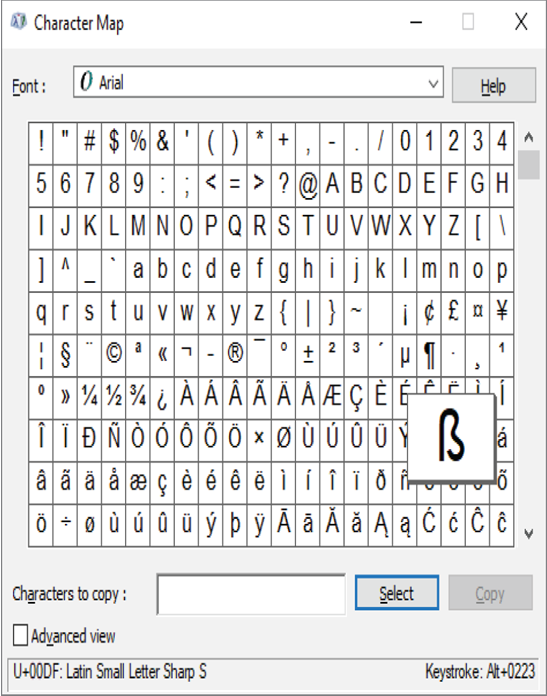

It was decided to map all English characters to ASCII using the numbers between 32 and 127 within the available 256. Moving forward a few decades, it turns out that as the world began to open up, it was learned that many written languages have more than 256 characters and require more space than an 8‐bit byte. An attempt was made to double the number of bytes, which would effectively deliver 16 bits, which is 65,536 (216) possible characters. This character recognition approach is referred to as Unicode, and on the Windows operating system, you can see this in a tool named charmap. When you enter charmap into a command prompt, the result resembles Figure 5.24. Following Figure 5.24 you see the conversion of brainjammer into Unicode.

FIGURE 5.24 Encoding and decoding data Unicode

U+0062 U+0072 U+0061 U+0069 U+006e U+006a U+0061 U+006d U+006d U+0065 U+0072

There were some concerns about Unicode having to do with its size and some questionable internal technical techniques for converting bits to characters. Those concerns were enough to drive the invention of encodings and the concept of UTF‐8. The introduction of UTF‐8 resulted in the ability to store Unicode in code points between 0 and 127 into a single byte, while all code points above 127 could be stored into two, three, or more bytes. First, a code point is a theoretical term used to describe how a letter is represented in memory. Second, the two, three, or more bytes that UTF‐8 supports are for characters in languages that have greater than what can fit in a single byte, i.e., 256. Therefore, in addition to the encoding concept evolving around how to store and render letter characters, it is mostly concerned with the proper rendering of non‐English character sets.

If you remember from Exercise 4.11, where you built a set of external tables, you ran the following COLLATE command along with the CREATE DATABASE command. The COLLATE command, along with its collation, sets the character encoding rules used for storing data into the database. The rules include locale, sort order, and character sensitivity conventions for character‐based data types. This is not a supported collation type for a dedicated SQL pool, and the collation type cannot be changed after the database is created. Both are possible when creating external tables on a serverless SQL pool.

COLLATE Latin1_General_100_BIN2_UTF8

To work around the lack of UTF‐8 support with dedicated SQL pools, you need to know about VARCHAR and NVARCHAR data types. A data type identifies what kind of value a data column contains. Simply, a VARCHAR data type stores ASCII values, and a NVARCHAR data type stores Unicode. You read previously that ASCII maps characters to numeric values between 32 and 127, which means there is limited to no special character support. This is where Unicode and NVARCHAR come into play. Unicode supports storing character strings using two bytes instead of the ASCII one byte. Therefore, to support some languages, you need to use Unicode. To gain some hands‐on experience, complete Exercise 5.9.